The Rising Energy Demand of AI: A 2027 Projection

Understanding AI's Energy Footprint

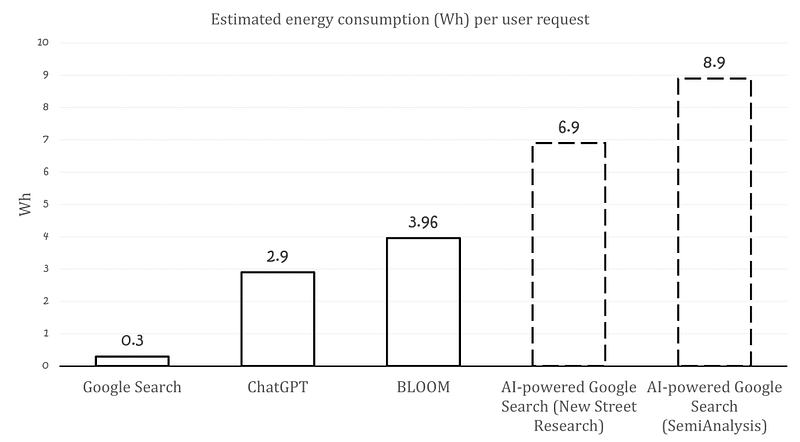

Artificial intelligence is growing rapidly, and so is its energy use. According to Alex de Vries, a PhD candidate at Vrije Universiteit Amsterdam, turning every Google search into a large language model (LLM) interaction could consume as much electricity as the whole of Ireland. In an article published in the journal Joule on October 10, 2023, he highlights the environmental challenges posed by AI.

The energy consumption of advanced models like ChatGPT, Bard, and Claude is substantial. For instance, ChatGPT is based on the GPT-3 model, which consumed approximately 1,287 MWh of electricity during its training. To put this in perspective, at roughly 15 kWh per 100 km that is enough to drive a Tesla Model 3 around the Earth's equator a little over 200 times.

When asked to illustrate this energy usage with household comparisons, the chatbot itself initially got the calculations wrong. When corrected, it graciously acknowledged the error, but the episode raises doubts about its reliability. For now, LLMs are likely adding to traditional search engine traffic rather than replacing it, presumably because their answers still need checking.

The energy required to train an LLM is well documented, but training is only the first phase. The second phase, inference, is less well understood even though every user experiences it. For ChatGPT, inference began at launch, and its energy consumption during this phase is estimated at 564 MWh per day. That is nearly half of the entire training total, spent again every day.
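To make the training-versus-inference comparison concrete, here is a minimal back-of-envelope sketch using only the two figures quoted above. The function and variable names are illustrative, and the annual figure simply assumes the daily estimate holds for 365 days, which is an assumption rather than a claim from the source.

```python
# Figures quoted in the text above.
TRAINING_MWH = 1_287         # GPT-3 training, one-off total
INFERENCE_MWH_PER_DAY = 564  # ChatGPT inference estimate, per day


def inference_summary(training_mwh: float, inference_mwh_per_day: float) -> dict:
    """Relate a one-off training cost to a recurring daily inference cost."""
    return {
        # Fraction of the training energy spent on inference in a single day.
        "daily_share_of_training": inference_mwh_per_day / training_mwh,
        # Days of inference needed to match the training energy.
        "days_to_match_training": training_mwh / inference_mwh_per_day,
        # Inference energy over a 365-day year, in GWh (1 GWh = 1,000 MWh).
        # Assumes the daily estimate stays constant all year.
        "annual_inference_gwh": inference_mwh_per_day * 365 / 1_000,
    }


summary = inference_summary(TRAINING_MWH, INFERENCE_MWH_PER_DAY)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

On these figures, a single day of inference uses about 44% of the training energy, so inference overtakes training in a little over two days.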

De Vries warns that as the demand for AI services grows, energy consumption linked to AI is expected to rise significantly in the near future. ChatGPT gained an astounding 100 million users within just two months of its launch, prompting a surge in AI-related products.

The first video, "AI's Insatiable Needs Wreak Havoc on Power Systems | Big Take," discusses the energy implications of AI's rapid growth.

The Role of Hardware Constraints

To handle every search as an LLM interaction, Google would need about 512,821 NVIDIA AI servers. That is roughly five times NVIDIA's projected production for 2023, which is around 100,000 servers. And because NVIDIA holds a near-monopoly in this market, finding alternative suppliers would be difficult.

Another critical issue is the chip supply. NVIDIA's supplier, TSMC, is struggling to expand its chip-on-wafer-on-substrate (CoWoS) packaging technology, which is crucial for producing the necessary chips. Although TSMC is investing in new facilities, they are not expected to reach significant output until 2027. By that time, NVIDIA could be shipping up to 1.5 million AI servers a year, so hardware limitations will remain a significant hurdle for years to come.
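The server counts in this section can be turned into rough electricity figures. The sketch below is a back-of-envelope estimate, not a reproduction of de Vries's method. It assumes every server draws 6.5 kW and runs at full load around the clock. That power figure is not stated in the text above; it is roughly the rated maximum of an NVIDIA DGX A100 system and is used here purely as an assumption. The function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Figures quoted in the text above.
GOOGLE_SERVERS = 512_821     # servers needed to serve every search via an LLM
NVIDIA_2023_OUTPUT = 100_000 # projected NVIDIA server production for 2023
SERVERS_2027 = 1_500_000     # server figure quoted for 2027

# ASSUMPTION (not from the text): power draw per server, in kW.
KW_PER_SERVER = 6.5


def annual_twh(servers: int, kw_per_server: float) -> float:
    """Annual electricity use in TWh, assuming continuous full-load operation."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR
    return kwh / 1e9  # 1 TWh = 1e9 kWh


def years_of_production(servers_needed: int, servers_per_year: int) -> float:
    """Years of output required to build a fleet at a fixed production rate."""
    return servers_needed / servers_per_year


print(f"Years of 2023-level output: {years_of_production(GOOGLE_SERVERS, NVIDIA_2023_OUTPUT):.2f}")
print(f"Google scenario: {annual_twh(GOOGLE_SERVERS, KW_PER_SERVER):.2f} TWh/year")
print(f"2027 scenario:   {annual_twh(SERVERS_2027, KW_PER_SERVER):.2f} TWh/year")
```

With these inputs, the Google scenario comes out near 29 TWh a year, in line with the Ireland comparison earlier in the article, and 1.5 million servers come out near 85 TWh a year. Real consumption would depend on utilization, cooling overhead, and the actual hardware mix, none of which this sketch models.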

The second video, "Why does AI pose a huge energy supply problem? | Inside Story," explores the implications of AI on energy supply.

Innovating for Sustainability

These constraints may nonetheless spur innovation. More efficient models and methods will likely emerge, and advancements in quantum computing could transform the landscape for AI energy consumption.

De Vries notes, “The result of making these tools more efficient can lead to broader applications and increased accessibility.” This phenomenon aligns with Jevons’ Paradox, where enhanced efficiency results in lower costs and subsequently increases demand.

This paradox has been observed many times. LED lighting is a prime example: because LEDs are cheap to buy and run, they are used far more widely, ultimately resulting in higher electricity consumption for lighting than ever before. The Sphere in Las Vegas exemplifies this trend.
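The mechanism behind Jevons' Paradox can be illustrated with a toy model. The sketch below assumes that cost per unit of service falls in proportion to energy per unit, and that demand follows a constant price elasticity. Both are simplifying assumptions, and the elasticity values are hypothetical rather than measured for AI or lighting.

```python
def total_energy_ratio(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of new to old total energy use after an efficiency improvement.

    efficiency_gain: factor by which energy (and, by assumption, cost) per
        unit of service falls; 2.0 means half the energy per query.
    elasticity: assumed price elasticity of demand, given as a positive number.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    cost_ratio = 1.0 / efficiency_gain          # new cost / old cost
    demand_ratio = cost_ratio ** (-elasticity)  # constant-elasticity demand
    return cost_ratio * demand_ratio            # energy per unit x units used


# Hypothetical elasticities, chosen only to show the three regimes.
for elasticity in (0.5, 1.0, 1.5):
    ratio = total_energy_ratio(efficiency_gain=2.0, elasticity=elasticity)
    print(f"elasticity={elasticity}: total energy x{ratio:.2f}")
```

In this model, doubling efficiency cuts total energy use only when the elasticity is below 1. At exactly 1 the saving is fully offset, and above 1 total consumption rises, which is the case Jevons' Paradox describes.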

The same dynamic could apply to AI. Until significant innovations lower energy consumption and secure sustainable energy supplies, it’s crucial to approach AI deployment with caution.

De Vries concludes, “Given the potential growth, we must be judicious about our AI applications. It is energy-intensive, so we must avoid deploying it in areas where it’s unnecessary.”